Last fall, NVIDIA announced a new class of processor, the DPU. In the words of Jensen Huang (Huang Renxun): “The data center has become a new type of computing unit. In a modern, secure, accelerated data center, the DPU has become an important part. The combination of CPU, GPU and DPU can form a fully programmable single AI computing unit, providing unprecedented security and computing power.”

As a dedicated processor for data centers, is the DPU really poised to become the third major compute chip after the CPU and GPU?

Why do you need a DPU?

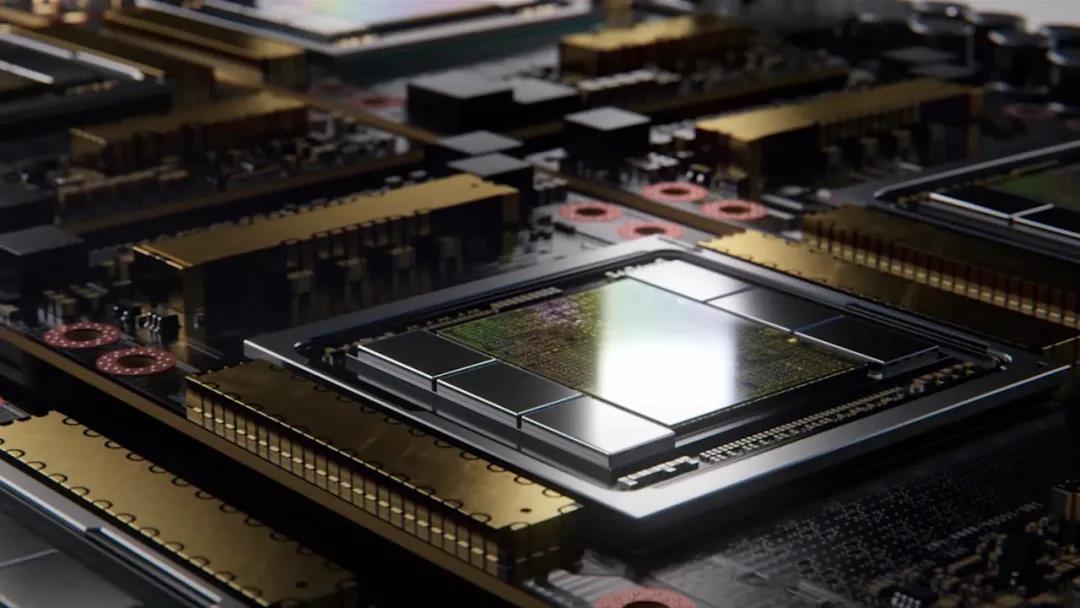

Source | PC Magazine

In most data centers it has become the norm for the CPU to handle general-purpose computing and the GPU to handle accelerated computing. The GPU takes compute-intensive tasks off the CPU: the CPU keeps doing general-purpose and logic-heavy work, while parallel computing, machine learning, and AI workloads are handed to the GPU.

As data centers become software-defined, they grow more flexible but also more heavily loaded: running the infrastructure alone consumes 20%-30% of CPU cores. The new division of labor therefore needs more specialized “types of work” to take load off the CPU.

Just as the GPU grew out of the needs of graphics and image processing, the DPU (Data Processing Unit) grew out of demands for low compute latency, data security, and resource virtualization under the trend toward device-edge-cloud integration. It is critical for the next generation of large-scale computing in the cloud.
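To make the 20%-30% figure concrete, here is a minimal sketch of how many cores infrastructure work would occupy on a single server. The 64-core server and the function name `infra_cores` are illustrative assumptions, not figures from the article.

```python
def infra_cores(total_cores: int, overhead_percent: int) -> float:
    """Cores consumed by infrastructure tasks at a given overhead percentage."""
    return total_cores * overhead_percent / 100


# Hypothetical 64-core server (an assumption for illustration only).
TOTAL_CORES = 64

for percent in (20, 30):  # the 20%-30% range cited in the text
    used = infra_cores(TOTAL_CORES, percent)
    print(f"{percent}% overhead: {used:.1f} cores for infrastructure, "
          f"{TOTAL_CORES - used:.1f} left for applications")
```

Offloading that infrastructure work to a dedicated processor is what would hand those cores back to applications.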

Who are the domestic and foreign players?

In fact, DPUs are not alone: SmartNICs also want a share of the transformation of basic network architecture. Networking between data center servers relies on an underlying software stack, which must implement the network interconnection protocols and also run the network security systems a data center requires. Traditionally this processing ran on the CPU; as SmartNICs become more popular, they are gradually taking over network security and network protocol processing from the CPU.

The Alveo U25, launched by Xilinx last year, is an integrated SmartNIC with a built-in programmable FPGA that combines network, storage, and compute acceleration on a single device.

The DPU can be regarded as an enhanced SmartNIC. On the one hand, it strengthens the SmartNIC’s processing of network protocols and network security; on the other hand, it integrates and strengthens distributed storage processing, so that the DPU can replace the CPU in both areas.

This field is about to set off a storm, and it is packed with players gearing up. According to incomplete statistics, domestic and foreign players in this market include major manufacturers such as Intel, NVIDIA, Broadcom, and Marvell, as well as start-ups such as Fungible and Pensando. Several of the major manufacturers have made acquisitions in recent years and are also investing in this area.

In terms of technical route, each vendor’s approach is different. Intel and Broadcom both target switch and router chips; Intel’s solution is based on FPGAs, and Broadcom’s is based on the Arm architecture. NVIDIA focuses on data security, network, and storage offload, building mainly on the network solutions of the acquired Mellanox and on the Arm architecture. Marvell mainly targets 5G bandwidth, using the programmable chip technology and Arm architecture it gained through the acquisition of Cavium. Pensando and Fungible are both startups: the former targets SDN with P4 support and implements its solution mainly through software-defined network processors, while the latter targets networking, storage, and virtualization with a solution based on the MIPS architecture.

Domestic manufacturers have disclosed little in this field. Huawei’s smart NICs fall within the DPU category, but its smart NIC chips have not been officially announced; Alibaba is understood to have relatively early-stage DPU products; and Zhongke Yushu recently announced plans for its next-generation DPU chip.

Taking on the 100-billion-yuan DPU market

Domestic chips accelerate growth

According to forecasts from Fungible and NVIDIA, the number of DPUs used in data centers will reach the same level as the number of data center servers. New servers are added at a rate on the order of 10 million per year. A server may not have a GPU, but it must have one or more DPUs, just as every server must have a network card. At about 15 million new servers per year and 10,000 yuan per DPU, that is a market on the order of 100 billion yuan.

Looking back, the founding team of Zhongke Yushu is considered one of the earliest in China to develop DPU chips. The company’s founder and CEO Dr. Yan Guihai, co-founder and CTO Dr. Lu Wenyan, and chief scientist Dr. Li Xiaowei are all from the State Key Laboratory of Computer Architecture, Institute of Computing Technology, Chinese Academy of Sciences. They proposed Software Defined Accelerator technology, independently developed the KPU (Kernel Processing Unit) chip architecture, and in 2019 designed the industry’s first chip to combine database and time-series data processing acceleration, which has been successfully taped out. Zhongke Yushu’s DPU chip is based on the self-developed KPU architecture, with core functions such as network protocol processing, database and big data processing acceleration, storage computing, and secure encryption computing.
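The market estimate above is simple multiplication, sketched below with the article's inputs (about 15 million new servers per year and 10,000 yuan per DPU). Assuming exactly one DPU per server is a simplification, since the text says "one or more"; the function and parameter names are illustrative.

```python
def dpu_market_size_yuan(servers_per_year: int,
                         dpus_per_server: int,
                         price_yuan: int) -> int:
    """Annual DPU market size in yuan under the stated assumptions."""
    return servers_per_year * dpus_per_server * price_yuan


# Inputs quoted in the article; one DPU per server is an assumption.
market = dpu_market_size_yuan(servers_per_year=15_000_000,
                              dpus_per_server=1,
                              price_yuan=10_000)
print(f"{market / 1e9:.0f} billion yuan per year")
```

With 15 million servers the result is 150 billion yuan per year, and with 10 million it is 100 billion, so either server figure lands on the order of 100 billion yuan.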

From KPU architecture to DPU chips, what are the key advantages of Zhongke Yushu?

Dr. Yan Guihai explained that, compared with traditional ASIC or SoC DPU chip architectures, the KPU is more flexible: the chip’s internal data-processing logic can be defined through on-the-fly software configuration, so it supports more types of computing workloads at the lowest power consumption while still delivering sufficient computing power. The KPU is positioned as an “agile and heterogeneous” dedicated computing architecture. Compared with CPUs, GPUs, FPGAs, and ASICs, the KPU-Drive solution has clear advantages in computing power, energy efficiency ratio (TCO), algorithm flexibility, marginal cost, and development cycle.

At present, Zhongke Yushu has accumulated 8 categories of KPU kernel resources, covering time series analysis, data query, encryption and decryption, data compression, protocol analysis, and more, and it has completed two generations of KPU iterations in the past two years. The KPU has also evolved from accelerating a single application algorithm to a comprehensive acceleration system that integrates network, database, and application algorithms.

Compared with similar schemes, does Zhongke Yushu have unique advantages?

Similar DPU solutions are understood to fall roughly into three types. The first is a homogeneous many-core DPU built on general-purpose cores, such as Broadcom’s Stingray architecture, which is built around multiple Arm cores and wins by sheer numbers. It offers good programmability and flexibility, but it is not application-specific enough, and its support for special algorithms and applications has no significant advantage over general-purpose CPUs. The second is a heterogeneous core array built on dedicated cores, which is highly targeted and performs better but sacrifices some flexibility. The third is a compromise between the two, in which the share of dedicated cores keeps increasing; it is becoming the latest product trend. NVIDIA’s BlueField2 series DPU, for example, includes 4 Arm cores and multiple dedicated acceleration core areas, and Fungible’s DPU contains 6 types of dedicated cores and 52 small general-purpose MIPS cores.

“Unlike foreign chip manufacturers such as Broadcom and Fungible, Zhongke Yushu’s DPU does not use the original many-core architecture. Instead, it focuses on heterogeneous cores, with targeted algorithm acceleration at its center, and uses the KPU architecture to organize those heterogeneous cores. Under the KPU architecture, Zhongke Yushu has developed a complete chip-level core for full L2/L3/L4 network protocol processing, and launched a data query processing core aimed directly at OLAP, OLTP, and SQL-like processing,” Yan Guihai said.

The benefits are more efficient data processing, more direct usage interfaces, and better virtualization support, features that are especially important in high-bandwidth, low-latency, data-intensive computing scenarios.

Built around the KPU architecture and following its first chip, the K1, in 2019, Zhongke Yushu expects to tape out its next chip, the K2, by the end of this year. According to the chip architecture disclosed so far, its functions include L2/L3/L4 network protocol processing capable of handling 200G of network bandwidth; integrated database and big data processing that directly targets OLAP, OLTP, and big data platforms such as Spark; and machine learning and secure encryption computing cores.

Zhongke Yushu K2 chip architecture